Who Are Voice Actors?

In their day-to-day professional work, voice actors operate across a range of sectors, most prominently commercials and advertising, followed by audiobooks, animation and cartoons, e-learning and educational content, video games, podcasting and audio dramas, dubbing and localization, and live performance and theatrical productions, each of which shows high demand across the industry.

Voice actors and other voice contributors are also significant in another way: they played a foundational role in the development of modern speech technologies. A notable example is LibriSpeech, one of the early large-scale audio datasets, derived from thousands of contributions to LibriVox and other public-domain audiobook platforms; it underpinned early breakthroughs in automatic speech recognition and the voice assistants we use today. These contributions, originally made in the spirit of open knowledge and accessibility, have since been repurposed into commercial AI pipelines, often without consent, attribution, or safeguards [5]. A decade later, these same contributions have exposed voice actors to a range of harms, and the systems built on them may automate, devalue, or displace the very actors who created them.

From our 20 participants, we created four personas based on years of experience and availability of resources: (a) Emerging Professional (low experience, low resources); (b) Solo Defender (high experience, low resources); (c) Delegator (low experience, high resources); and (d) Strategist (high experience, high resources). For instance, common traits of the Delegator persona include more than five years of experience, a strong home studio setup, reliance on direct client relationships with occasional platform work, and proactive use of NAVA's AI riders, often opting out if the client disagrees. They often have their own representative to assess contracts and negotiate client compliance with AI clauses. This group holds deep concerns about AI risks, especially unauthorized cloning and the unauthorized use of their voices for AI training.
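The persona scheme is a 2×2 grid over two attributes, which a lookup table captures directly. The sketch below is illustrative only: `Persona` and `assign_persona` are hypothetical names, and because the study does not state the cut-offs for "high" experience or resources, the function takes already-decided booleans rather than inventing thresholds.

```python
from enum import Enum


class Persona(Enum):
    """The four personas, one per (experience, resources) quadrant."""
    EMERGING_PROFESSIONAL = "Emerging Professional"  # low experience, low resources
    SOLO_DEFENDER = "Solo Defender"                  # high experience, low resources
    DELEGATOR = "Delegator"                          # low experience, high resources
    STRATEGIST = "Strategist"                        # high experience, high resources


# Lookup table: (high_experience, high_resources) -> persona.
_QUADRANTS = {
    (False, False): Persona.EMERGING_PROFESSIONAL,
    (True, False): Persona.SOLO_DEFENDER,
    (False, True): Persona.DELEGATOR,
    (True, True): Persona.STRATEGIST,
}


def assign_persona(high_experience: bool, high_resources: bool) -> Persona:
    """Place a participant in one of the four quadrants.

    Hypothetical helper: how "high" experience and resources are decided is
    not specified, so the caller supplies those judgments as booleans.
    """
    return _QUADRANTS[(high_experience, high_resources)]
```

For example, `assign_persona(high_experience=True, high_resources=False)` returns `Persona.SOLO_DEFENDER`.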

PRAC³: A New Way to Understand Risk of Voice Data

Voice data isn't just audio; it's biometric, meaning it can uniquely identify you. From audiobook narrators to anime dubbers, voice actors now face threats such as unauthorized cloning and deepfakes, unpaid reuse across platforms, hidden AI clauses in contracts, perpetual rights grabs without clear compensation, and the use of their voices in erotic content.

In one interview, the original male voice of a major tech company's voice assistant recounted how a one-time recording session and a yearly non-compete fee evolved into widespread, unauthorized use of his voice.

Voice actors also found their voices being misused to create AI-generated content for controversial media, such as political content. One case:

Some also feared their voices could be embedded in propaganda or defamatory content, with no clear mechanism for recourse or correction. This lack of control over one's digital likeness raises questions about professional and personal boundaries in the age of generative AI. In another incident, a TikToker used a voice sample from a voice actor's website to create a reel:

Beyond accountability, participants were concerned about their professional and economic reputation: having their voice associated with low-quality productions, or cloned by individuals, could damage their credibility, as audiences might conflate the synthetic performance with the original artist. Other incidents highlighted the opacity of content distribution chains and the inadequacy of existing legal measures.

Drawing on voice actors' real-life scenarios (both past incidents and perceived future risks), we built the PRAC³ framework to assess harms that emerge over time and beyond contractual boundaries. Voice actors experience three archetypal threat scenarios that encapsulate both direct and downstream risks. These scenarios highlight how harm is not limited to the moment of data creation but often arises through redistribution, secondary use, and platform-driven commodification.

- Voluntary, non-monetary contributors: Actors donate voice data for public good, only to have it later surface in unauthorized commercial tools.

- Monetized contractual contributors: Initial legal agreements include ambiguous language that often enables resale, transfer, or indefinite reuse of voice data, especially following corporate changes.

- Secondary, informal misuse: Legally recorded voices leak into meme culture, satire, or political propaganda via AI tools, distorting public perception and damaging actors' professional standing.

PRAC³ stands for Privacy, Reputation, Accountability, Consent, Credit, and Compensation. Each dimension represents a critical vector of exposure or harm for voice actors in the AI data economy. Consent, Credit, and Compensation represent foundational rights that are often overlooked or bypassed in AI data pipelines. The three newly added components come from voice actors' experiences: Privacy, which covers breaches of biometric identity through cloning or surveillance; Reputation, which covers harm from voice misuse in misaligned, offensive, or deceptive contexts; and Accountability, which covers legal and technical gaps in traceability and recourse when adversarial actors misuse voice actors' data and harm general users.

Each PRAC³ component tackles a different threat:

- Privacy: Can your voice be cloned or tracked?

- Reputation: Will your voice be used in inappropriate content?

- Accountability: Can you find out who misused your data?

- Consent, Credit, Compensation: Are you asked, acknowledged, and paid?
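The six dimensions and their guiding questions can be encoded as a small data structure, for example to drive a review checklist. This is a minimal sketch; the names `Prac3`, `FOUNDATIONAL_RIGHTS`, `ADDED_FROM_INTERVIEWS`, and `checklist` are hypothetical, and splitting "Are you asked, acknowledged, and paid?" into one question per C is our own choice.

```python
from enum import Enum


class Prac3(Enum):
    """The six PRAC³ dimensions, each paired with its guiding question."""
    PRIVACY = "Can your voice be cloned or tracked?"
    REPUTATION = "Will your voice be used in inappropriate content?"
    ACCOUNTABILITY = "Can you find out who misused your data?"
    CONSENT = "Are you asked?"
    CREDIT = "Are you acknowledged?"
    COMPENSATION = "Are you paid?"


# The three C dimensions are the foundational rights; Privacy, Reputation,
# and Accountability were added from voice actors' experiences.
FOUNDATIONAL_RIGHTS = frozenset({Prac3.CONSENT, Prac3.CREDIT, Prac3.COMPENSATION})
ADDED_FROM_INTERVIEWS = frozenset(set(Prac3) - FOUNDATIONAL_RIGHTS)


def checklist() -> list[str]:
    """Render the framework as a review checklist in P-R-A-C-C-C order."""
    return [f"{dim.name.title()}: {dim.value}" for dim in Prac3]
```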

| ID | Scenario | Incident (Participant) | PRAC³ Domain | Threat Agent | Asset at Risk | Potential Impact | Mitigation Status |
|---|---|---|---|---|---|---|---|
| 1 | Audition sample reused in national commercial | P17 discovered her voice in an ad she never recorded (P17) | Consent, Compensation, Accountability | Client/Studio | Voice data, creative labor | Unauthorized commercial use; loss of income; reputational risk | None – discovered post-facto |
| 2 | Voice used in AI-generated adult content | Game mod used AI to create pornographic scenes with actor's voice (P7) | Reputation, Consent, Accountability | Third-party modders | Public persona, moral integrity | Defamation; emotional distress | Unreported; no recourse |
| 3 | Exhibit A clause allows post-production cloning | Audiobook contract allowed voice replication without notice (P4) | Consent, Compensation, Accountability | Publisher | Voice likeness; residual earnings | Job displacement; IP erosion | Discovered post-signing |
| 4 | AI voice scam using child’s cloned voice | Scam calls using cloned voice of loved one (P16) | Privacy, Identity, Accountability | Cybercriminals | Biometric identity | Financial fraud; emotional harm | Hypothetical/precautionary |
| 5 | Podcast platform AI-translates and clones voice | Spotify translated podcaster’s voice without opt-out (P19) | Consent, Privacy, Accountability | Platform provider | Voice data; linguistic identity | Unconsented speech generation | Actor manually obstructed usage |
| 6 | No disclosure of voice reuse for AI training | P4 reported clause only found post-distribution | Consent, Privacy, Compensation | Client | Voice training data | Unpaid AI training use | No consent captured |
| 7 | AI-generated voice used in foreign language translation | Spotify used AI to translate podcaster's voice without clear opt-in (P16) | Privacy, Consent, Accountability | Platform | Voice identity; language authenticity | Loss of control over voice use, misrepresentation | Voice actor manually obstructed feature with background audio |
| 8 | Audition samples used without hiring actor | Actors heard their audition voices in released work (P14, P16, P17, P18, P20) | Consent, Compensation, Credit | Client/Producer | Audition recordings; performance data | Unpaid labor; reputational confusion | Typically undiscovered until after release |
| 9 | Voice used in modded game porn content | AI-generated adult content using voice actors' characters (P7) | Reputation, Privacy, Accountability | Third-party users | Character alignment; public image | Moral distress; brand damage | No action taken; actors unaware until fans reported |
| 10 | Hidden AI training clauses in audiobook contracts | Exhibit A allowed voice replication post-recording (P4) | Consent, Accountability, Compensation | Publisher | Creative control; residuals | Job replacement by AI; under-compensation | Clause discovered only post-facto |
| 11 | Client reuses voice clip across projects without permission | P17’s voice reused in ad without consent | Consent, Accountability, Credit | Client | Vocal performance; authorship | Unauthorized branding; reputational risk | No prior notification; discovered incidentally |
| 12 | Scam calls using AI voice cloning of relatives | Actors fear scammers using their voice for fraud (P3, P16) | Privacy, Identity, Accountability | Cybercriminals | Biometric voice identity | Financial scams; family trauma | No technical prevention mechanisms |
| 13 | AI contracts lack explicit voice usage limitations | Contracts omit AI voice use clauses (P14, P1) | Consent, Privacy, Accountability | Clients/Platforms | Legal rights over voice data | Non-consensual reuse or AI training | Actors often overlook contract language |
| 14 | Perpetual license buried in email agreements | Clients assume full rights from email threads (P10, P18) | Consent, Compensation, Credit | Clients | Work ownership; royalties | Lack of residuals; misappropriation | No formal legal review of communication |
| 15 | Replacement by AI for minor roles or demo work | Lost work for minor roles to AI-generated voices (P14) | Compensation, Reputation, Accountability | Clients | Job opportunities; creative career pathways | Job displacement | Community advocacy; union action (no technical protection) |
| 16 | Voice licensed and mass redistributed via third-party | Large tech company licensed actor's voice to third-party platforms (P12) | Consent, Compensation, Accountability, Privacy | Clients | Voice data; public image | Ongoing uncompensated use; loss of control; reputational risk | Attempted renegotiation failed |
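Treating each table row as a structured record makes it easy to ask which PRAC³ domains recur most often. The sketch below transcribes four rows (IDs 1, 2, 8, and 16) into a hypothetical `Incident` record and tallies domains and participants; the class and function names are illustrative and not part of the study's tooling.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Incident:
    """One row of the incident table (a subset of its columns)."""
    incident_id: int
    scenario: str
    participants: tuple[str, ...]
    domains: tuple[str, ...]
    threat_agent: str
    mitigation: str


# Four rows transcribed from the table; the other rows follow the same shape.
INCIDENTS = [
    Incident(1, "Audition sample reused in national commercial", ("P17",),
             ("Consent", "Compensation", "Accountability"), "Client/Studio",
             "None – discovered post-facto"),
    Incident(2, "Voice used in AI-generated adult content", ("P7",),
             ("Reputation", "Consent", "Accountability"), "Third-party modders",
             "Unreported; no recourse"),
    Incident(8, "Audition samples used without hiring actor",
             ("P14", "P16", "P17", "P18", "P20"),
             ("Consent", "Compensation", "Credit"), "Client/Producer",
             "Typically undiscovered until after release"),
    Incident(16, "Voice licensed and mass redistributed via third-party", ("P12",),
             ("Consent", "Compensation", "Accountability", "Privacy"), "Clients",
             "Attempted renegotiation failed"),
]


def domain_counts(incidents: list[Incident]) -> Counter:
    """Count how often each PRAC³ domain is implicated across incidents."""
    return Counter(domain for inc in incidents for domain in inc.domains)


def affected_participants(incidents: list[Incident]) -> set[str]:
    """Union of participant IDs reporting any of the given incidents."""
    return {p for inc in incidents for p in inc.participants}
```

On these four rows, Consent is implicated in all four, while Compensation and Accountability each appear in three.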

Clontract: AI-Powered Risk Assessment for Contract Negotiation

At its core, Clontract is a team of AI agents that work together like a digital legal assistant. Think of it as a legal analyst, risk assessor, and negotiation strategist rolled into one, with each role handled by a separate AI agent and the agents talking to each other. Clontract uses this multi-agent architecture to parse, analyze, and advise on voice actor contracts. It is built using Agno, a lightweight framework that enables multi-agent collaboration, and each agent plays a distinct role:

| Agent | Role |
|---|---|
| Coordinator | Orchestrates tasks between agents |
| Analyst | Finds risky or vague clauses |
| Researcher | Pulls in laws, platform policies |
| Strategist | Gives negotiation tips and solutions |
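The division of labor in the table can be sketched as a coordinator that passes shared state through the other three agents. This is a plain-Python illustration, not Agno's API: each agent here is a deterministic stub standing in for an LLM-backed agent, all names (`Review`, `analyst`, `researcher`, `strategist`, `coordinator`) are hypothetical, and the fixed sequential hand-off is an assumption (a real coordinator may route tasks dynamically).

```python
from dataclasses import dataclass, field


@dataclass
class Review:
    """Shared state the coordinator passes from agent to agent."""
    contract_text: str
    flagged_clauses: list[str] = field(default_factory=list)
    context: dict[str, str] = field(default_factory=dict)
    tips: list[str] = field(default_factory=list)


def analyst(review: Review) -> Review:
    # Stub for an LLM call: flag sentences containing an open-ended grant.
    review.flagged_clauses = [
        s.strip() for s in review.contract_text.split(".")
        if "any medium" in s.lower()
    ]
    return review


def researcher(review: Review) -> Review:
    # Stub for retrieval over laws and platform policies.
    for clause in review.flagged_clauses:
        review.context[clause] = "Compare against platform policy and AI riders."
    return review


def strategist(review: Review) -> Review:
    # Stub for negotiation advice grounded in the researcher's context.
    review.tips = [f"Request a time-bound, medium-specific license for: '{c}'"
                   for c in review.flagged_clauses]
    return review


def coordinator(contract_text: str) -> Review:
    """Run the agents in sequence, each enriching the shared review."""
    review = Review(contract_text)
    for agent in (analyst, researcher, strategist):
        review = agent(review)
    return review
```

Passing one shared object keeps each agent's output visible to the next, which is the property that distinguishes this from a single one-shot prompt.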

Each agent is equipped with tools:

- A PDF reader for parsing contracts.

- Knowledge retrieval systems from a custom legal corpus (e.g., Amazon ACX, Voice123).

- Text embedding for contextual understanding.

- A web search module for pulling in live policy updates.

- And, of course, a large language model (LLM) for interpretation and advice.
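Knowledge retrieval with text embeddings boils down to embedding the query and each corpus document, then ranking documents by similarity. The sketch below substitutes a toy bag-of-words "embedding" and cosine similarity for a real embedding model; the corpus snippets are invented placeholders, not actual ACX or Voice123 policy text, and `embed`, `cosine`, and `retrieve` are hypothetical names.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lower-cased word counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[str]:
    """Return the IDs of the k corpus documents most similar to the query."""
    q = embed(query)
    ranked = sorted(corpus, key=lambda doc_id: cosine(q, embed(corpus[doc_id])),
                    reverse=True)
    return ranked[:k]


# Invented snippets standing in for a curated legal corpus.
CORPUS = {
    "policy-ai-training": "voice recordings may not be used to train synthetic voice models without consent",
    "policy-payment": "payment is due within thirty days of project approval",
    "rider-ai": "client shall not create a digital replica or clone of the voice",
}
```

A query such as "can my voice be used to train AI voice models" ranks `policy-ai-training` first; a production system would swap `embed` for a learned embedding model and a vector index.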

The agents collaborate like a virtual legal roundtable. If a clause says "voice can be reused in any medium", Clontract doesn't just flag it; it explains why it's risky, which PRAC³ dimensions are involved, and what you could ask for instead. This avoids the "one-shot" answer typical of other AI systems and instead mimics a thoughtful, multi-step legal review.
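The "flag, explain, suggest" output for a single clause can be illustrated with a rule table. This is a deliberately simplified stand-in: Clontract uses an LLM rather than regular expressions, and the patterns, explanations, PRAC³ mappings, and counter-proposals below are our own hypothetical examples, as are the names `Rule`, `RULES`, and `explain`.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    pattern: str                 # regex matched case-insensitively against a clause
    why: str                     # plain-language explanation of the risk
    dimensions: tuple[str, ...]  # PRAC³ dimensions involved (our assumption)
    ask_instead: str             # suggested counter-proposal


# Hypothetical rules for illustration only.
RULES = [
    Rule(r"any medium",
         "An open-ended grant covers uses, including AI, that did not exist when you signed.",
         ("Consent", "Compensation"),
         "Limit the license to named media and a fixed term."),
    Rule(r"perpetu",
         "A perpetual grant never expires, so reuse can continue without new payment.",
         ("Consent", "Compensation"),
         "Request a time-bound license with renewal fees."),
    Rule(r"synthetic|clone|digital replica",
         "The clause may permit cloning your voice.",
         ("Privacy", "Consent", "Reputation"),
         "Prohibit synthetic replicas without separate written consent."),
]


def explain(clause: str) -> list[dict]:
    """Return one explanation per rule the clause triggers (empty if none)."""
    return [
        {"why": r.why, "dimensions": r.dimensions, "ask_instead": r.ask_instead}
        for r in RULES
        if re.search(r.pattern, clause, flags=re.IGNORECASE)
    ]
```

Calling `explain("Voice can be reused in any medium, in perpetuity.")` returns two findings, one for the open-ended medium and one for the perpetual term.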

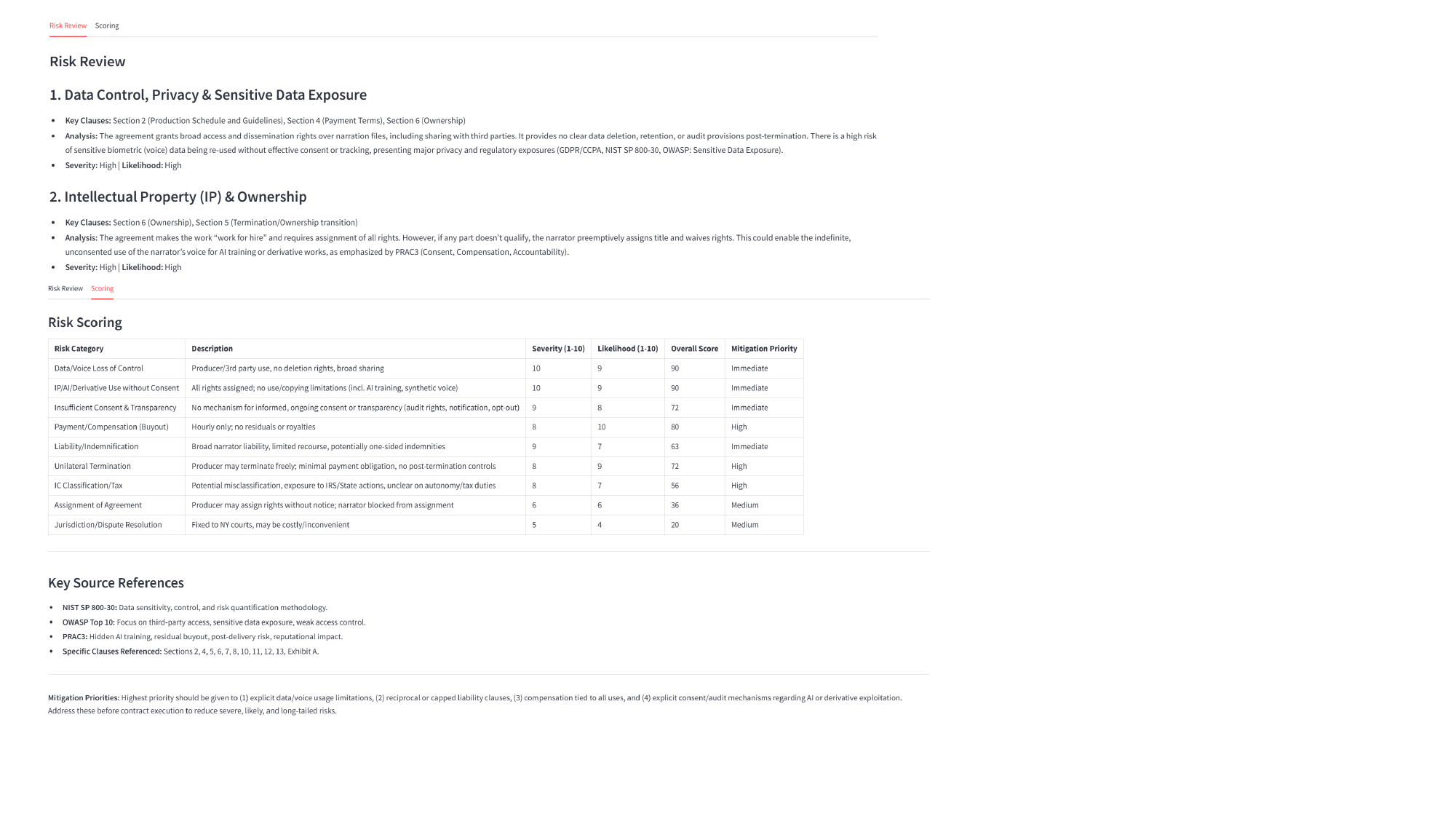

Real World Use: Amazon ACX Contract Example

We tested Clontract on audiobook contracts from Amazon ACX. Here's what it found:

What Did It Do?

- Parsed the full contract.

- Identified AI-related risks, such as:

  - Hidden clauses allowing AI-based voice replication.

  - Vague language like "in any medium now known or later developed".

  - Poor protection against voice reuse without pay.

- Applied the PRAC³ framework for categorization.

- Applied NIST and OWASP risk-assessment guidance to score risk levels from 0 to 100 in each PRAC³ dimension.

- Generated user-facing negotiation advice like:

"Clause 4 allows indefinite use of your voice in AI systems. We suggest requesting a time-bound license (e.g., 1 year) with opt-out rights."
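OWASP's Risk Rating Methodology rates several likelihood factors and impact factors on a 0 to 9 scale and combines likelihood with impact, and NIST SP 800-30 likewise treats risk as a function of likelihood and impact. How Clontract maps these onto a 0 to 100 score per dimension is not described, so the formula below (mean likelihood times mean impact, each normalized by 9, times 100) is our own assumption, and `dimension_score` is a hypothetical name.

```python
from statistics import mean


def dimension_score(likelihood_factors: list[int], impact_factors: list[int]) -> float:
    """Combine 0-9 factor ratings into a 0-100 score for one PRAC³ dimension.

    Assumed formula: (mean likelihood / 9) * (mean impact / 9) * 100.
    """
    for f in (*likelihood_factors, *impact_factors):
        if not 0 <= f <= 9:
            raise ValueError(f"factor {f} is outside the 0-9 scale")
    likelihood = mean(likelihood_factors) / 9
    impact = mean(impact_factors) / 9
    return round(likelihood * impact * 100, 1)
```

For example, `dimension_score([6, 3], [9, 9])` gives 50.0: a medium likelihood (4.5 of 9) paired with maximal impact. Multiplying rather than adding means a dimension scores high only when misuse is both plausible and damaging.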

The results were presented in clean markdown reports with sections for "Key Risks", "Clause Analysis", and "Negotiation Tips", all accessible to someone without legal training.
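Assembling such a report is straightforward string building. In this minimal sketch, the three section names come from the text, while the function name `render_report`, the `##` heading level, and the bullet layout are our assumptions.

```python
def render_report(key_risks: list[str],
                  clause_analysis: dict[str, str],
                  tips: list[str]) -> str:
    """Assemble a plain-language markdown report with the three sections."""
    lines = ["## Key Risks"]
    lines += [f"- {risk}" for risk in key_risks]
    lines += ["", "## Clause Analysis"]
    lines += [f"- **{clause}**: {note}" for clause, note in clause_analysis.items()]
    lines += ["", "## Negotiation Tips"]
    lines += [f"- {tip}" for tip in tips]
    return "\n".join(lines) + "\n"
```

Keeping the renderer separate from the agents lets the same findings be exported to other formats later without touching the analysis.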

Real-World Impact: From Passive Victims to Active Agents

Clontract's significance goes beyond technical innovation. We envision it as a catalyst for social impact and industry standardization in the evolving voice economy. Today, most voice actors, particularly freelancers and early-career professionals, lack access to legal counsel, union protection, or clear industry guidelines. As a result, they face a constant stream of dense, legally complex contracts, often under time pressure, and are expected to sign away long-term rights, especially regarding AI usage, data licensing, and voice reuse, with little clarity or recourse.

While informal community-driven safeguards exist, such as the NAVA AI Rider, which some voice actors ask clients to include in contracts, these are far from universal. As our interviews revealed, such riders are often ignored or misunderstood, particularly by anonymous clients on freelance platforms or by buyers in industries like gaming, e-learning, or television, who may be unaware of evolving ethical norms within the voice community. Without standardized, enforceable practices, these gaps expose voice actors to significant reputational, economic, and identity-related harm.

Clontract and the PRAC³ framework directly respond to this reality by offering a practical, scalable solution:

- Clontract helps voice actors identify and understand AI-related clauses, such as perpetual training rights, synthetic voice reuse, and sublicensing, that are often buried or vaguely worded. By surfacing these risks in plain language, the tool enables more informed consent and allows actors to push back with confidence or seek modifications.

- The PRAC³ framework repositions voice actors not just as service providers, but as data subjects and rights-holding stakeholders in AI systems. It incorporates Privacy, Reputation, Accountability, Consent, Credit, and Compensation, creating a lens through which actors, clients, and platforms can assess the ethical and professional implications of voice data use.

Together, Clontract and PRAC³ enable:

- Contractual transparency in an otherwise opaque system.

- Structured risk awareness that actors can use to inform their decisions.

- A step toward standardized practices, such as auto-flagging missing AI riders or recommending best-practice clauses, even when dealing with anonymous or international clients.
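Auto-flagging a missing AI rider is the inverse of clause flagging: instead of matching risky language, it checks for protective language that should be present but is not. In the sketch below, the provision names and patterns in `EXPECTED_PROVISIONS` are hypothetical placeholders and are not the wording of the NAVA AI Rider; a provision counts as present only if all of its patterns match somewhere in the contract.

```python
import re

# Hypothetical checklist of protections an actor might expect; NOT the text
# of the NAVA AI Rider. Every pattern in a list must match for the provision
# to count as present.
EXPECTED_PROVISIONS = {
    "AI training restriction": [r"\b(AI|machine learning)\b", r"\btrain"],
    "Synthetic voice restriction": [r"synthetic|digital replica|\bclon"],
    "Consent for new uses": [r"written consent"],
}


def missing_provisions(contract_text: str) -> list[str]:
    """List expected provisions with no matching language in the contract."""
    return [
        name for name, patterns in EXPECTED_PROVISIONS.items()
        if not all(re.search(p, contract_text, flags=re.IGNORECASE) for p in patterns)
    ]
```

A contract that only grants a license "in any medium now known or later developed" is flagged for all three provisions, whereas one stating that the client "shall not use the recordings to train AI systems or create a digital replica without written consent" is flagged for none. Keyword presence is only a proxy: it cannot tell a prohibition from a permission, so a flagged or unflagged result still needs human or LLM review.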

Future Direction

The current version is a proof of concept. It has not yet undergone large-scale trials or legal validation. Areas for improvement include:

- Expanding the Knowledge Base: The current system includes contracts and policy documents from more than 21 major voice platforms (e.g., Amazon ACX, Fiverr, Voice123), covering licensing terms, AI and data usage clauses, NDAs, and voice-over agreements. Moving forward, we aim to curate a more diverse set of contract samples from voice actors engaged in non-platform work, such as individual projects, collaborations with agents, or contracts with production houses. This will significantly enrich the knowledge base, enabling more context-aware and personalized risk assessments.

- Building a Large-Scale AI Risk Repository: We will develop a comprehensive risk repository fed by continuous risk reporting from voice actors, and augment it with scenario simulation using large language models (LLMs) to generate diverse and anticipatory risk scenarios. The goal is to support predictive and scalable AI risk assessment models, tailored to the voice acting industry, that capture the long-tail risks of voice data sharing.

- Evaluation and User-Centered Design: To ensure that Clontract effectively meets its intended goals, we will design and implement a robust evaluation strategy that assesses both system performance and user experience against the project's objectives and the evolving needs of stakeholders. In collaboration with the National Association of Voice Actors (NAVA), we will involve human legal experts and voice actors in a two-fold evaluation. Continuous feedback loops with users will guide iterative improvements and ensure practical relevance.